Understanding Large Point Clouds and Their Challenges

Large point clouds are high-density collections of 3D spatial data points captured using technologies like LiDAR or photogrammetry. They’re widely used in:

- AEC projects for as-built documentation and design validation

- Surveying and mapping for topographic and infrastructure analysis

- Heritage preservation for capturing the precise geometry of historic sites

While invaluable for accuracy, large point clouds can be notoriously challenging to manage. Their massive file sizes, often reaching hundreds of gigabytes, can strain both hardware and software.

Common issues include:

- Prolonged loading times

- Lag during navigation or editing

- Frequent software crashes

Such disruptions can derail productivity, delay deliverables, and increase project costs. In time-sensitive Scan to BIM workflows, even a single crash can result in hours of lost progress.

This article shares seven expert, field-tested tips to help you handle large point clouds efficiently, reducing crashes, improving processing speed, and ensuring smoother project execution.

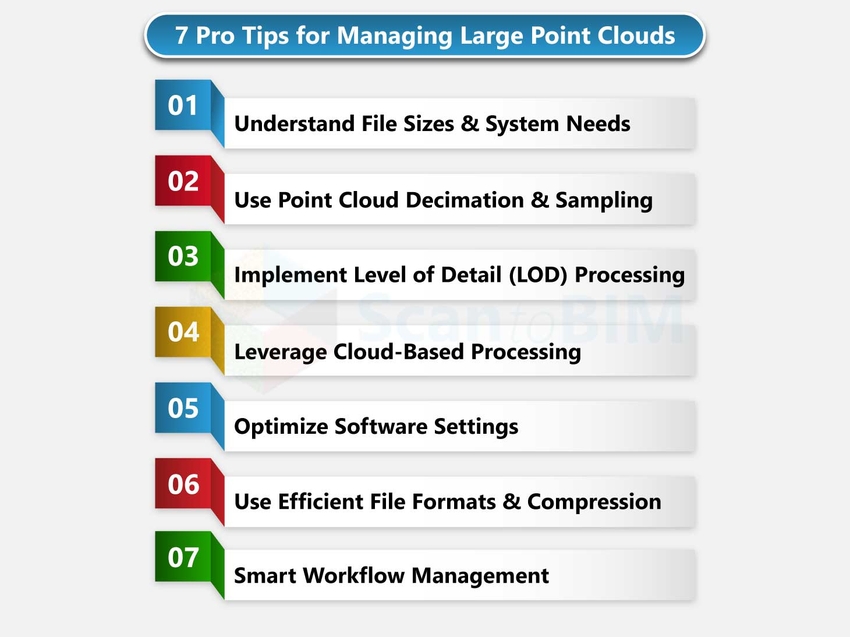

7 Proven Tips for Managing Large Point Clouds

Handling massive point cloud datasets doesn’t have to mean endless lag or unexpected crashes. By combining smart data management practices with the right hardware setup, you can streamline workflows, improve software stability, and keep projects on track.

Let’s explore seven proven strategies to help you work faster and more efficiently.

Tip 1: Understand Point Cloud File Sizes and System Requirements

Before diving into optimization techniques, it’s essential to understand how point cloud size directly impacts system performance.

1. Typical File Sizes

- **LiDAR scans:** Depending on resolution and coverage, datasets can range from 1–5 GB for small interior spaces to 100+ GB for large infrastructure projects.

- **Photogrammetry-derived clouds:** Often denser than LiDAR outputs, easily exceeding 200 GB for high-detail, multi-angle captures.

2. Hardware Requirements by Dataset Size

- Small projects (under 5 GB) – Can run on standard workstations with 16 GB RAM, a mid-tier GPU, and SSD storage.

- Medium projects (5–50 GB) – Require 32–64 GB RAM, a dedicated GPU with 6–8 GB VRAM, and an NVMe SSD for fast read/write.

- Large projects (50+ GB) – Ideally processed on high-performance workstations with 128 GB+ RAM, a high-end GPU (12–24 GB VRAM), and multi-terabyte NVMe SSDs.
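If you triage many datasets, the tiers above can be encoded as a simple lookup. This is a minimal sketch: the thresholds come from the list, the function name is hypothetical, and a dataset of exactly 50 GB is treated as medium, a boundary the list leaves open.

```python
def hardware_tier(dataset_gb: float) -> str:
    """Map a point cloud's on-disk size in GB to the hardware tiers listed above."""
    if dataset_gb < 0:
        raise ValueError("dataset size cannot be negative")
    if dataset_gb < 5:
        return "small: 16 GB RAM, mid-tier GPU, SSD"
    if dataset_gb <= 50:
        return "medium: 32-64 GB RAM, GPU with 6-8 GB VRAM, NVMe SSD"
    return "large: 128+ GB RAM, GPU with 12-24 GB VRAM, multi-TB NVMe SSDs"


for size_gb in (2, 40, 300):
    print(f"{size_gb} GB -> {hardware_tier(size_gb)}")
```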

3. Memory vs. Storage

- RAM determines how much of the point cloud can be held and processed at once. Insufficient RAM forces the system to swap data to disk, which slows performance sharply.

- Storage speed affects file loading, saving, and navigation smoothness, which is why SSD or NVMe drives are essential.
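A back-of-the-envelope estimate shows why RAM runs out: multiply the point count by the bytes each point occupies in memory. The sketch below assumes a hypothetical layout of three 64-bit floats for XYZ, three 8-bit RGB values, and a 16-bit intensity (29 bytes per point). Real applications use their own layouts and add index and display overhead, so treat the result as a lower bound.

```python
# Assumed in-memory layout per point (hypothetical; every application differs):
#   X, Y, Z as 64-bit floats  -> 3 * 8 bytes
#   R, G, B as 8-bit integers -> 3 * 1 byte
#   intensity as 16-bit int   -> 2 bytes
BYTES_PER_POINT = 3 * 8 + 3 * 1 + 2  # 29 bytes


def estimated_ram_gib(num_points: int, bytes_per_point: int = BYTES_PER_POINT) -> float:
    """Lower-bound RAM (GiB) to hold every point at once, ignoring index and display overhead."""
    return num_points * bytes_per_point / 1024**3


# A hypothetical 2-billion-point scan:
print(f"{estimated_ram_gib(2_000_000_000):.1f} GiB")  # → 54.0 GiB
```

Even before octree indexes and display buffers, such a scan would not fit in 32 GB of RAM, so the system would start swapping.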

By matching your hardware capabilities to the dataset size, you can prevent bottlenecks before they occur. This proactive step lays the foundation for smooth point cloud management in all subsequent workflows.

Tip 2: Use Point Cloud Decimation and Sampling

Large point clouds often contain far more data than is necessary for design, analysis, or visualization. Decimation and sampling reduce file size without compromising essential accuracy, improving both performance and stability.

1. When to Use Decimation

- When the dataset’s density exceeds project requirements, for example, an indoor scan captured at 2 mm point spacing when only 10 mm accuracy is needed.

- Prior to importing into BIM, CAD, or visualization software with limited memory handling.

- For sharing datasets with collaborators who don’t require full-resolution detail.
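The 2 mm versus 10 mm example translates into a concrete reduction factor. For scanned surfaces, point count scales with the inverse square of spacing, so a short calculation shows how much data can go. This assumes roughly uniform spacing and that a 10 mm spacing is adequate for 10 mm accuracy; the function name is hypothetical.

```python
def surface_reduction_factor(current_spacing_mm: float, target_spacing_mm: float) -> float:
    """Approximate point-count reduction when resampling a surface scan.

    Assumes points lie on surfaces with roughly uniform spacing, so points per
    unit area scale with 1 / spacing**2.
    """
    if current_spacing_mm <= 0 or target_spacing_mm <= 0:
        raise ValueError("spacing must be positive")
    return (target_spacing_mm / current_spacing_mm) ** 2


factor = surface_reduction_factor(2, 10)
print(f"keep about 1 in {factor:.0f} points ({100 / factor:.0f}% of the original)")
# → keep about 1 in 25 points (4% of the original)
```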

2. Sampling Strategies That Preserve Data Quality

- Uniform sampling – Reduces points evenly across the dataset, keeping overall geometry consistent.

- Spatial filtering – Removes redundant points in flat or low-detail areas while retaining high density in complex zones.

- Region-based reduction – Applies stronger decimation to secondary areas while keeping critical zones at high resolution.
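The three strategies can be sketched in plain Python on a list of (x, y, z) tuples. Uniform sampling here keeps every n-th point. Spatial filtering is illustrated with a voxel grid that keeps one point per occupied cube, which caps density and removes redundant points where sampling is densest; true adaptive filtering that keeps extra points on complex geometry (for example, curvature-based) is more involved and not shown. Region-based reduction applies a fine voxel inside a hypothetical axis-aligned "critical" box and a coarse one elsewhere. All names and values are illustrative assumptions; production tools do this in native code on millions of points.

```python
import math
from typing import Iterable

Point = tuple[float, float, float]


def uniform_sample(points: list[Point], every_nth: int) -> list[Point]:
    """Uniform sampling: keep every n-th point so the cloud thins evenly."""
    if every_nth < 1:
        raise ValueError("every_nth must be at least 1")
    return points[::every_nth]


def voxel_sample(points: Iterable[Point], voxel_size: float) -> list[Point]:
    """Spatial subsampling: keep the first point found in each occupied voxel."""
    kept: dict[tuple[int, ...], Point] = {}
    for p in points:
        key = tuple(math.floor(c / voxel_size) for c in p)
        kept.setdefault(key, p)
    return list(kept.values())


def region_based_sample(points: list[Point], box_min: Point, box_max: Point,
                        fine_voxel: float, coarse_voxel: float) -> list[Point]:
    """Region-based reduction: fine voxels inside a critical box, coarse voxels elsewhere."""
    def inside(p: Point) -> bool:
        return all(lo <= c <= hi for c, lo, hi in zip(p, box_min, box_max))

    critical = [p for p in points if inside(p)]
    secondary = [p for p in points if not inside(p)]
    return voxel_sample(critical, fine_voxel) + voxel_sample(secondary, coarse_voxel)


# Synthetic 20 x 20 grid of floor points at 0.5 m spacing (400 points).
floor = [(i * 0.5, j * 0.5, 0.0) for i in range(20) for j in range(20)]
print(len(uniform_sample(floor, 10)))  # → 40
print(len(voxel_sample(floor, 2.0)))   # → 25
print(len(region_based_sample(floor, (0.0, 0.0, -1.0), (4.5, 4.5, 1.0), 1.0, 5.0)))  # → 28
```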

3. Tools and Software for Point Reduction

- CloudCompare – Free, open-source decimation and filtering tools.

- Autodesk ReCap – Region-based point density control during export.

- Leica Cyclone / Trimble RealWorks – Advanced sampling filters for survey-grade data.

By applying decimation strategically, you can dramatically cut processing times while preserving the precision that matters most.

Tip 3: Implement Level of Detail (LOD) Processing

Level of Detail (LOD) processing ensures your software only loads the amount of point cloud data necessary for the current view, dramatically improving performance. By scaling detail dynamically, you can navigate and edit massive datasets without overloading your system.

Understanding LOD Hierarchies

- High LOD – Maximum density for close-up work, inspections, or precision measurements.

- Medium LOD – Balanced detail for general modeling and design coordination.

- Low LOD – Minimal detail for navigation, overviews, and preliminary alignment.

Switching between these levels based on your workflow reduces GPU and RAM strain.
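One minimal way to switch between these levels is by camera distance. The thresholds (5 m and 30 m) and the function name below are hypothetical; many viewers use screen-space error or a point budget instead, but the principle is the same: the farther a region is, the fewer points it needs.

```python
import math

Point = tuple[float, float, float]

# Hypothetical distance thresholds in metres; tune them per project and viewer.
HIGH_LOD_MAX_DISTANCE = 5.0
MEDIUM_LOD_MAX_DISTANCE = 30.0


def select_lod(camera: Point, region_center: Point) -> str:
    """Choose a level of detail for a region from its distance to the camera."""
    distance = math.dist(camera, region_center)
    if distance <= HIGH_LOD_MAX_DISTANCE:
        return "high"
    if distance <= MEDIUM_LOD_MAX_DISTANCE:
        return "medium"
    return "low"


camera = (0.0, 0.0, 1.6)
# Prints high, then medium, then low.
for center in [(2.0, 0.0, 1.6), (20.0, 0.0, 1.6), (120.0, 0.0, 1.6)]:
    print(center, select_lod(camera, center))
```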

Octree and Other Spatial Data Structures

- Octree indexing recursively subdivides the point cloud’s bounding box into eight smaller cubic “cells” per level, enabling the software to load only the visible segments.

- Alternative structures like k-d trees or voxel grids offer similar benefits in organizing spatial data for fast retrieval.
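Octree indexing boils down to giving each point a cell address at a chosen depth. The sketch below computes integer cell coordinates inside a cubic bounding box and interleaves their bits into a single Morton (Z-order) key, one common way octree implementations order cells. Function names and the cube parameters are assumptions, not any particular product's file format.

```python
import math

Point = tuple[float, float, float]


def cell_coords(point: Point, cube_min: Point, cube_size: float, depth: int) -> tuple[int, int, int]:
    """Integer coordinates of the octree cell containing `point` at a given depth."""
    cells_per_axis = 1 << depth  # each level halves the cell edge, doubling cells per axis
    ix, iy, iz = (
        min(max(math.floor((c - lo) / cube_size * cells_per_axis), 0), cells_per_axis - 1)
        for c, lo in zip(point, cube_min)
    )  # clamping keeps points on the cube's far faces in the last cell
    return ix, iy, iz


def morton_key(ix: int, iy: int, iz: int, depth: int) -> int:
    """Interleave the bits of the cell coordinates into one Z-order (Morton) key."""
    key = 0
    for bit in range(depth):
        key |= ((ix >> bit) & 1) << (3 * bit)
        key |= ((iy >> bit) & 1) << (3 * bit + 1)
        key |= ((iz >> bit) & 1) << (3 * bit + 2)
    return key


# An 8 m cube split to depth 3 gives 8 x 8 x 8 cells of 1 m each.
cell = cell_coords((5.5, 2.2, 7.9), (0.0, 0.0, 0.0), 8.0, depth=3)
key = morton_key(*cell, depth=3)
print(cell, key, key >> 3)  # → (5, 2, 7) 373 46
```

Dropping the last three bits (`key >> 3`) yields the parent cell's key one level up, here 46 for cell (2, 1, 3). That prefix property is what lets a viewer walk from coarse cells to fine ones and fetch only the cells in view.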

Progressive Loading Techniques

- Load coarse data first, then refine with higher detail as you zoom in.

- Many modern tools, such as Autodesk ReCap and Bentley ContextCapture, implement streaming-based LOD, loading points on demand instead of all at once.
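Progressive loading can be sketched as a generator that yields the cloud in passes: a sparse first pass taken from a coarse voxel grid, then finer passes that add only points not already delivered, and finally everything that remains. The voxel sizes are hypothetical, and a real viewer would stream octree nodes from disk rather than filter an in-memory list.

```python
import math
from typing import Iterator

Point = tuple[float, float, float]


def voxel_key(p: Point, size: float) -> tuple[int, ...]:
    """Integer voxel index of a point for a given voxel edge length."""
    return tuple(math.floor(c / size) for c in p)


def progressive_passes(points: list[Point], voxel_sizes: list[float]) -> Iterator[list[Point]]:
    """Yield coarse-to-fine batches; each batch contains only points not sent before."""
    sent: set[int] = set()  # indices of points already delivered
    for size in sorted(voxel_sizes, reverse=True):  # largest voxel = coarsest pass
        # Voxels already represented by earlier passes need no new point.
        occupied = {voxel_key(points[i], size) for i in sent}
        batch = []
        for i, p in enumerate(points):
            key = voxel_key(p, size)
            if i not in sent and key not in occupied:
                occupied.add(key)
                sent.add(i)
                batch.append(p)
        yield batch
    # Final pass: every remaining point, i.e. full resolution.
    yield [p for i, p in enumerate(points) if i not in sent]


grid = [(i * 0.5, j * 0.5, 0.0) for i in range(20) for j in range(20)]
batches = list(progressive_passes(grid, [1.0, 2.0]))
print([len(b) for b in batches])  # → [25, 75, 300]
```

A viewer would render the 25-point pass immediately and refine the display as later batches arrive.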

By leveraging LOD processing, you balance visual quality with system efficiency, making even multi-billion-point datasets responsive and manageable.

Tip 4: Leverage Cloud-Based Processing Solutions

Cloud-based processing allows you to handle massive point cloud datasets without relying solely on local hardware. By offloading computation to powerful remote servers, you can process, visualize, and share large files faster and more efficiently.

Benefits of Cloud Computing for Point Clouds

- Scalability – Instantly access high-performance computing power for heavy datasets.

- Collaboration – Share and review point clouds in real time with distributed teams.

- Storage efficiency – Store terabytes of data securely without overloading local drives.

- Accessibility – Work from multiple devices without transferring huge files manually.

Popular Cloud Platforms and Services

- Autodesk Construction Cloud / BIM 360 – For hosting and collaborative viewing.

- Pix4D Cloud – For photogrammetry processing and sharing.

- Bentley ProjectWise / iTwin – For large-scale infrastructure datasets.

- Amazon Web Services (AWS) & Microsoft Azure – Custom HPC (High-Performance Computing) environments for point cloud processing.

Cost Considerations and When to Use Cloud Processing

- Cloud services often operate on pay-as-you-go or subscription models.

- Ideal for short-term, high-computation projects where buying hardware isn’t cost-effective.

- Evaluate recurring costs vs. investment in a powerful local workstation for frequent, long-term processing.
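A simple break-even calculation helps with the cloud-versus-workstation decision. All figures below are hypothetical placeholders, not quotes from any provider; substitute your own hardware price, expected lifetime, and hourly cloud rate.

```python
def break_even_hours(workstation_cost: float, lifetime_months: int,
                     cloud_rate_per_hour: float) -> float:
    """Monthly compute hours at which renting cloud capacity costs as much as owning a workstation.

    Ignores power, maintenance, storage, and data-transfer fees on both sides.
    """
    if lifetime_months <= 0 or cloud_rate_per_hour <= 0:
        raise ValueError("lifetime and hourly rate must be positive")
    monthly_ownership_cost = workstation_cost / lifetime_months
    return monthly_ownership_cost / cloud_rate_per_hour


# Hypothetical inputs: a 9,000 (currency units) workstation over 36 months vs. 2.50/hour in the cloud.
hours = break_even_hours(9_000, 36, 2.50)
print(f"Break-even at about {hours:.0f} processing hours per month")  # → 100
```

Below the break-even point, pay-as-you-go cloud processing is cheaper; well above it, a local workstation usually pays for itself.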

By strategically using cloud solutions, you can bypass local system limits, accelerate workflows, and enable seamless multi-user collaboration on even the largest datasets.

Tip 5: Optimize Your Software Settings

Even with powerful hardware, poor software configuration can slow down Scan to BIM workflows or cause crashes. Fine-tuning settings for memory usage, rendering, and processing can dramatically improve stability and speed.

Memory Management Settings

- Allocate as much RAM to your point cloud application as the software allows.

- Enable disk caching to handle datasets larger than system memory.

- Regularly purge temporary files to free up resources.

Display and Rendering Options

- Lower point size or density in display mode for smoother navigation.

- Disable advanced shading, lighting, and high-quality textures when not needed.

- Use bounding boxes or placeholders for large, off-screen regions.

Background Processing Configuration

- Turn off unnecessary background tasks like real-time indexing or automatic backups during active editing.

- Schedule heavy processing tasks, such as meshing and classification, to run during downtime.

Software-Specific Optimization Tips

- Autodesk ReCap – Use Region Manager to hide unneeded scans.

- CloudCompare – Activate “Show Octree” mode for faster visualization.

- Bentley Pointools – Reduce viewport quality for navigation, then increase it for exports.

Proper software optimization ensures you use available resources efficiently, helping large point clouds run smoothly without overwhelming your system.

Tip 6: Use Efficient File Formats and Compression

The file format you choose for storing and sharing point clouds can significantly impact performance, storage space, and compatibility. Selecting the right format, paired with smart compression, keeps datasets manageable without sacrificing accuracy.

Comparing Point Cloud File Formats (LAS, PLY, PCD, etc.)

- LAS/LAZ – Industry-standard for LiDAR; LAZ is the compressed version, offering 5–10× smaller file sizes.

- PLY – Supports color and other per-point attributes; widely used for 3D visualization in laser scan to BIM work.

- PCD – Native to the Point Cloud Library (PCL); ideal for robotics and research applications.

- E57 – Vendor-neutral format that supports large datasets and multiple scan sources.
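Because LAS stores key metadata in a fixed-layout binary header, you can check a file's version, point format, and point count before loading a single point, which is handy when sizing hardware as described in Tip 1. The sketch reads a few fields using the public-header byte offsets from the ASPRS LAS specification (1.0–1.2 headers are 227 bytes; later versions keep these offsets and append fields, including a 64-bit point count this sketch ignores). It targets uncompressed LAS, and `synthetic_header` is a hypothetical helper that builds a test header so the example runs without a real file.

```python
import struct

LAS_MIN_HEADER = 227  # size of the LAS 1.0-1.2 public header block in bytes


def read_las_header(data: bytes) -> dict:
    """Parse selected public-header fields from the first bytes of a LAS file."""
    if len(data) < LAS_MIN_HEADER or data[:4] != b"LASF":
        raise ValueError("not a LAS header: missing 'LASF' signature or too short")
    major, minor = struct.unpack_from("<BB", data, 24)
    offset_to_points = struct.unpack_from("<I", data, 96)[0]
    point_format, record_length, legacy_count = struct.unpack_from("<BHI", data, 104)
    scale = struct.unpack_from("<3d", data, 131)
    max_x, min_x, max_y, min_y, max_z, min_z = struct.unpack_from("<6d", data, 179)
    return {
        "version": f"{major}.{minor}",
        "point_format": point_format,
        "record_length": record_length,
        "point_count": legacy_count,  # legacy 32-bit count; LAS 1.4 adds a 64-bit field
        "offset_to_points": offset_to_points,
        "scale": scale,
        "bounds": ((min_x, min_y, min_z), (max_x, max_y, max_z)),
    }


def synthetic_header(point_count: int) -> bytes:
    """Hypothetical test helper: a minimal LAS 1.2 header for point format 3."""
    buf = bytearray(LAS_MIN_HEADER)
    buf[:4] = b"LASF"
    struct.pack_into("<BB", buf, 24, 1, 2)
    struct.pack_into("<HI", buf, 94, LAS_MIN_HEADER, LAS_MIN_HEADER)
    struct.pack_into("<BHI", buf, 104, 3, 34, point_count)
    struct.pack_into("<3d", buf, 131, 0.001, 0.001, 0.001)
    struct.pack_into("<6d", buf, 179, 100.0, 0.0, 50.0, 0.0, 10.0, 0.0)
    return bytes(buf)


header = read_las_header(synthetic_header(10_000_000))
size_mb = header["point_count"] * header["record_length"] / 1e6
print(header["version"], header["point_format"], f"{size_mb:.0f} MB of point records")
# With a real file:
#   with open(path, "rb") as f:
#       header = read_las_header(f.read(LAS_MIN_HEADER))
```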

Compression Techniques That Maintain Quality

- Lossless compression – Reduces file size without altering geometry; examples include LAZ, ZIP, and GZIP.

- Attribute-specific compression – Compresses secondary attributes (color, intensity) while preserving coordinates.

- Use software like LAStools, CloudCompare, or PDAL for efficient batch compression.
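To make "lossless" concrete: a lossless codec must return the exact original bytes after decompression. The sketch packs XYZ coordinates as scaled 32-bit integers (the way LAS stores them, minus the offset) and compresses them with Python's built-in zlib as a generic stand-in; LAZ instead uses LASzip, a coder designed specifically for LAS point data. The scale factor and sample points are hypothetical, and real scans compress less predictably than this regular row.

```python
import struct
import zlib

SCALE = 0.001  # hypothetical 1 mm coordinate resolution, similar to a LAS scale factor


def pack_xyz(points: list[tuple[float, float, float]]) -> bytes:
    """Store coordinates as scaled 32-bit integers (little-endian), as LAS does."""
    ints = [round(c / SCALE) for p in points for c in p]
    return struct.pack(f"<{len(ints)}i", *ints)


# Synthetic, highly regular row of points.
points = [(i * 0.01, 2.0, 0.5) for i in range(10_000)]
raw = pack_xyz(points)
compressed = zlib.compress(raw, level=9)

assert zlib.decompress(compressed) == raw  # lossless: byte-for-byte identical
print(f"{len(raw):,} bytes -> {len(compressed):,} bytes")
```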

Format Conversion Best Practices

- Always keep an uncompressed master copy for archival purposes.

- Convert to lightweight formats for day-to-day editing or sharing.

- Test conversion results to ensure no loss of critical metadata or precision.
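Testing a conversion can start with comparing point counts and bounding boxes between the source and the converted data, within a tolerance tied to the coordinate resolution. The helper below is hypothetical and works on in-memory point lists; in practice you would read both files with your own tools first, and metadata such as the coordinate system needs a separate check.

```python
import math

Point = tuple[float, float, float]


def bounds(points: list[Point]) -> tuple[Point, Point]:
    """Axis-aligned bounding box as (min corner, max corner)."""
    xs, ys, zs = zip(*points)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def conversion_ok(original: list[Point], converted: list[Point], tolerance: float = 0.001) -> bool:
    """True if point counts match and bounding boxes agree within `tolerance` (metres)."""
    if len(original) != len(converted):
        return False  # points were dropped or duplicated
    (o_min, o_max), (c_min, c_max) = bounds(original), bounds(converted)
    return all(
        math.isclose(a, b, abs_tol=tolerance)
        for a, b in zip(o_min + o_max, c_min + c_max)
    )


source = [(1.2344, 5.0, 0.1), (3.0, 6.5, 2.0)]
exported = [(1.234, 5.0, 0.1), (3.0, 6.5, 2.0)]  # rounded to 1 mm on export
print(conversion_ok(source, exported))      # → True
print(conversion_ok(source, exported[:1]))  # → False
```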

By using optimized formats and smart compression, you can improve file handling speed, reduce storage demands, and streamline data exchange between platforms.

Tip 7: Implement Smart Workflow Management

Managing large point clouds efficiently isn’t just about hardware and software; it’s also about structuring your workflow to minimize downtime and maximize productivity. Smart workflow management ensures smoother processing, faster turnaround, and reduced risk of data loss.

Batch Processing Strategies

- Queue multiple operations like filtering, decimation, and classification to run sequentially.

- Schedule heavy processing tasks overnight or during non-working hours.

- Use command-line tools or scripting to automate repetitive operations.
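A batch queue can be as simple as a list of named steps applied in order, each one logged. The steps below are hypothetical stand-ins (a crude elevation filter and an every-other-point decimation) operating on in-memory points; in a real pipeline each step would call your processing tool. For overnight runs, a script like this can be launched by the operating system's scheduler, such as cron on Linux/macOS or Task Scheduler on Windows.

```python
import logging
from typing import Callable

Point = tuple[float, float, float]
Step = tuple[str, Callable[[list[Point]], list[Point]]]

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("batch")


def run_queue(points: list[Point], steps: list[Step]) -> list[Point]:
    """Apply each (name, function) step in order, logging point counts."""
    for name, step in steps:
        before = len(points)
        points = step(points)
        log.info("%s: %d -> %d points", name, before, len(points))
    return points


# Hypothetical steps standing in for real filtering and decimation tools.
steps: list[Step] = [
    ("filter_z", lambda pts: [p for p in pts if -1.0 <= p[2] <= 50.0]),  # drop spurious high points
    ("decimate", lambda pts: pts[::2]),  # keep every second point
]
cloud = [(float(i), 0.0, 100.0 if i % 10 == 0 else 1.0) for i in range(100)]
result = run_queue(cloud, steps)
print(len(result))  # → 45
```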

Breaking Large Datasets into Manageable Chunks

- Split point clouds by zones, floors, or survey sections.

- Process and validate each section independently before merging.

- Load only the required subset into your working session to reduce memory load.
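Splitting by floors can be sketched by binning points on elevation, and splitting by zones works the same way with X/Y tiles. The 3.5 m floor height, base elevation, and 10 m tile size are hypothetical defaults, as are the function names.

```python
import math
from collections import defaultdict

Point = tuple[float, float, float]


def split_by_floor(points: list[Point], base_elevation: float = 0.0,
                   floor_height: float = 3.5) -> dict[int, list[Point]]:
    """Group points into per-floor chunks by elevation band."""
    chunks: dict[int, list[Point]] = defaultdict(list)
    for p in points:
        chunks[math.floor((p[2] - base_elevation) / floor_height)].append(p)
    return dict(chunks)


def split_by_tile(points: list[Point], tile_size: float = 10.0) -> dict[tuple[int, int], list[Point]]:
    """Group points into square X/Y tiles, e.g. for zones or survey sections."""
    chunks: dict[tuple[int, int], list[Point]] = defaultdict(list)
    for p in points:
        chunks[(math.floor(p[0] / tile_size), math.floor(p[1] / tile_size))].append(p)
    return dict(chunks)


scan = [(0.0, 0.0, 1.0), (5.0, 5.0, 2.0), (1.0, 1.0, 4.0), (2.0, 2.0, 8.0), (12.0, 3.0, 1.0)]
floors = split_by_floor(scan)
print({floor: len(pts) for floor, pts in floors.items()})  # → {0: 3, 1: 1, 2: 1}
working_set = floors[1]  # load only the floor you are modelling
```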

Automated Processing Pipelines

- Use tools like PDAL, FME, or Python scripts to create repeatable workflows.

- Automate file conversion, indexing, and cleaning to reduce manual effort.
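A repeatable pipeline is easiest to maintain as data. The sketch below generates a PDAL pipeline for each file in a hypothetical input list: read LAS, flag and remove statistical outliers, thin the points, and write compressed LAZ. The stage types (`readers.las`, `filters.outlier`, `filters.range`, `filters.sample`, `writers.las`) are PDAL stages, but the option values are placeholders, and option names and accepted values can differ between PDAL versions, so check them against the documentation for your installation. The script only builds the JSON; each saved pipeline file would be run with `pdal pipeline <file>.json`.

```python
import json


def build_pipeline(src: str, dst: str, min_spacing_m: float = 0.01) -> list[dict]:
    """PDAL pipeline stages: read LAS, drop statistical outliers, thin, write LAZ."""
    return [
        {"type": "readers.las", "filename": src},
        # filters.outlier flags outliers as noise (Classification 7) rather than deleting them...
        {"type": "filters.outlier", "method": "statistical", "mean_k": 8, "multiplier": 2.0},
        # ...so filters.range removes the flagged points.
        {"type": "filters.range", "limits": "Classification![7:7]"},
        # filters.sample keeps points at least `radius` apart (placeholder value).
        {"type": "filters.sample", "radius": min_spacing_m},
        # Accepted compression values vary across PDAL versions; check the writers.las docs.
        {"type": "writers.las", "filename": dst, "compression": "laszip"},
    ]


inputs = ["floor1.las", "floor2.las"]  # hypothetical input files
pipelines = {
    src: {"pipeline": build_pipeline(src, src.rsplit(".", 1)[0] + "_clean.laz")}
    for src in inputs
}
print(json.dumps(pipelines["floor1.las"], indent=2))
```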

Progress Monitoring and Error Recovery

- Enable logging to track batch operations and identify issues quickly.

- Save intermediate results frequently to avoid reprocessing from scratch.

- Use checkpoints for long-running processes, allowing partial restarts if failures occur.
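Checkpointing can be as lightweight as recording finished chunk IDs in a small JSON file and skipping them on restart. The checkpoint file, chunk names, and the `flaky` processing step (which simulates a crash on its first attempt at one chunk) are hypothetical placeholders for your own processing.

```python
import json
import logging
import tempfile
from pathlib import Path
from typing import Callable, Iterable

log = logging.getLogger("pipeline")


def run_with_checkpoints(chunk_ids: Iterable[str], process_chunk: Callable[[str], None],
                         checkpoint: Path) -> list[str]:
    """Process chunks in order, saving progress after each so a crash can resume."""
    done: set[str] = set(json.loads(checkpoint.read_text())) if checkpoint.exists() else set()
    processed = []
    for chunk_id in chunk_ids:
        if chunk_id in done:
            log.info("skipping %s (already done)", chunk_id)
            continue
        process_chunk(chunk_id)  # raises on failure; earlier chunks stay recorded
        done.add(chunk_id)
        checkpoint.write_text(json.dumps(sorted(done)))  # persist after every chunk
        processed.append(chunk_id)
    return processed


with tempfile.TemporaryDirectory() as tmp:
    ckpt = Path(tmp) / "checkpoint.json"
    chunks = ["floor1", "floor2", "floor3"]
    attempts: dict[str, int] = {}

    def flaky(chunk_id: str) -> None:
        """Hypothetical step that fails the first time it processes floor2."""
        attempts[chunk_id] = attempts.get(chunk_id, 0) + 1
        if chunk_id == "floor2" and attempts[chunk_id] == 1:
            raise RuntimeError("simulated crash")

    try:
        run_with_checkpoints(chunks, flaky, ckpt)
    except RuntimeError:
        pass  # the first run stops at floor2; floor1 is already checkpointed
    resumed = run_with_checkpoints(chunks, flaky, ckpt)
    print(resumed)  # → ['floor2', 'floor3']
```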

By organizing your tasks into a well-structured, partially automated pipeline, you can handle massive point clouds with fewer interruptions and greater consistency.

Conclusion: Turning Point Cloud Challenges into Seamless Workflows

Managing large point clouds doesn’t have to be a constant battle. By understanding file sizes and system requirements, applying decimation, implementing LOD processing, leveraging cloud solutions, optimizing software settings, using efficient formats, and structuring smart workflows, you can work faster, more efficiently, and without crashes.

Adopt these strategies to make your point cloud processing smoother and more stable, and your project timelines more predictable.